Python libraries for natural language processing make it easy to tackle text analysis, language translation, and sentiment analysis. With tools like NLTK and spaCy, Python's NLP ecosystem offers accessible and efficient solutions for developers at any level. In plain terms, the purpose of Natural Language Processing (NLP) is to build technologies that enable computers to understand human communication. Using NLP, computers can find patterns in vast amounts of text and make sense of the data. Modern NLP relies largely on machine learning and deep learning algorithms.

NLP sits at the intersection of data science and AI. Its core goal is to teach machines to understand spoken or written human language and make sense of textual content. In this blog, we explore the top Python libraries for NLP to help developers choose the right tools.

NLP's growing popularity among organizations is largely attributed to its ability to provide valuable insight into language- and customer-related issues that arise during product interactions.

Big technology companies such as Google, Amazon, and Facebook are investing heavily in NLP research to improve their chatbots, virtual assistants, recommendation engines, and other machine learning-driven solutions.

Because most NLP solutions depend on substantial computing power, developers need access to advanced tools that help them choose and apply the best NLP methods and algorithms. Such tools have made it possible to build services capable of handling the complexity of natural language.

What Is a Natural Language Processing Library

An NLP library is a toolkit of pre-built tools that simplify text preprocessing and related tasks in natural language processing projects. In the past, NLP projects were largely limited to specialists with niche skills. Today, these libraries simplify text processing, leaving developers more time to concentrate on building machine learning models. Each library addresses different NLP problems, and together they have become widely adopted among Python developers, helping them build high-quality projects.
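
To make "text preprocessing" concrete, here is a minimal, library-free sketch of steps that NLP libraries typically automate: lowercasing, tokenizing, and dropping stop words. The tiny `STOP_WORDS` set and the `preprocess` function are illustrative assumptions, not any library's API; real libraries ship far larger stop-word lists and smarter tokenizers.

```python
import re

# Hypothetical, deliberately tiny stop-word list; real libraries ship much larger ones.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in"}

def preprocess(text: str) -> list[str]:
    """Lowercase the text, split it into word tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return [tok for tok in tokens if tok not in STOP_WORDS]

print(preprocess("The cats are sitting in the garden."))  # → ['cats', 'sitting', 'garden']
```

Every library in this list wraps steps like these in tested, language-aware components, which is exactly the time saving described above.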

Top Python Libraries for Natural Language Processing

As a popular general-purpose programming language, Python provides a vast set of tools for almost every application, and today its libraries are among the most widely used tools for natural language processing. Accordingly, we have curated a concise selection of ten notable NLP libraries for your consideration.

List of Python Libraries for Natural Language Processing

The libraries covered in this article are listed below, followed by a closer look at each one.

- Natural Language Toolkit (NLTK)

- spaCy

- TextBlob

- Gensim

- CoreNLP

- Pattern

- polyglot

- PyNLPl

- scikit-learn

- PyTorch

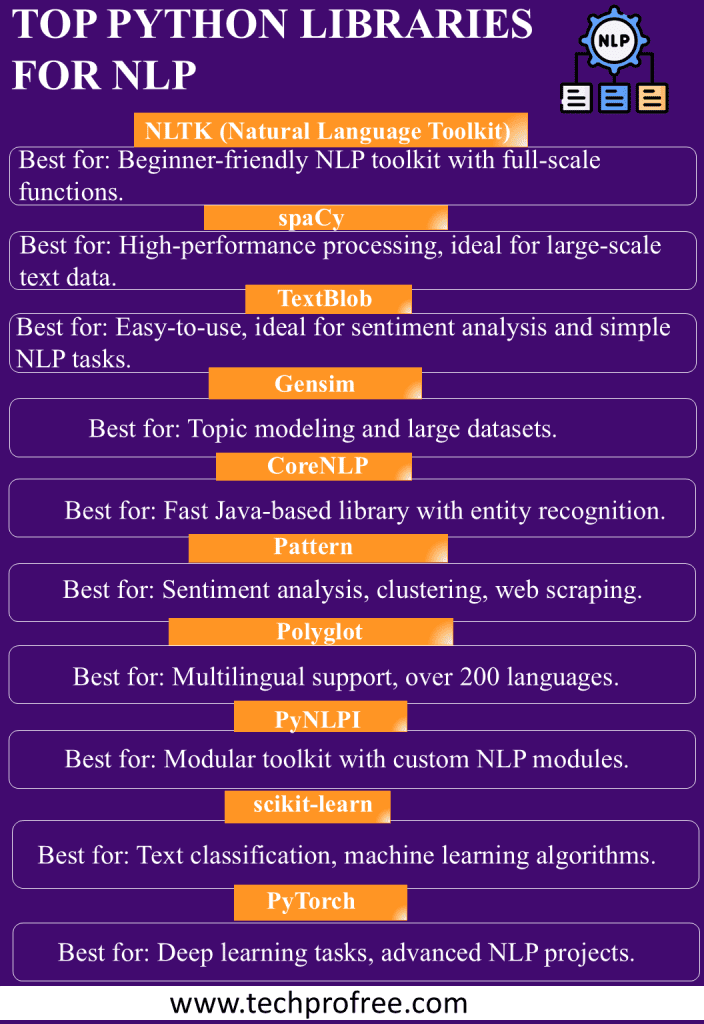

Natural Language Toolkit (NLTK)

NLTK appears on almost every list of Python NLP libraries, and for good reason. Known for its comprehensive collection of functions, it is one of the mainstream Python libraries for NLP tasks. Despite its complexity, it remains a favorite among beginners because of its broad coverage of linguistic tasks and resources.
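
A typical NLTK workflow starts by tokenizing text, building n-grams, and counting frequencies (NLTK provides `nltk.util.ngrams` and `nltk.FreqDist` for this). The sketch below reproduces that idea with the standard library only, so it runs without installing NLTK or downloading its data; the `tokenize` and `bigrams` helpers are our own illustrative functions, not NLTK's.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Split lowercased text into word tokens (punctuation is dropped)."""
    return re.findall(r"\w+", text.lower())

def bigrams(tokens: list[str]) -> list[tuple[str, str]]:
    """Pair each token with its successor, similar in spirit to n-grams with n=2."""
    return list(zip(tokens, tokens[1:]))

text = "New York is big. New York is busy."
tokens = tokenize(text)
word_freq = Counter(tokens)           # plays the role of a frequency distribution
pair_freq = Counter(bigrams(tokens))  # counts of adjacent word pairs

print(word_freq.most_common(2))    # → [('new', 2), ('york', 2)]
print(pair_freq[("new", "york")])  # → 2
```

Frequent bigrams such as ("new", "york") are a first step toward spotting multi-word expressions, a task NLTK supports with its collocation tools.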

spaCy

spaCy is a widely used NLP library designed for production-grade performance. Its ability to process huge quantities of text makes it a good choice for statistical NLP, which typically involves large amounts of data. Compared with some competitors, spaCy may be less flexible, but its user-friendly interface makes it accessible to beginners and advanced users alike. It supports tokenization for more than 50 languages and offers features such as word vectors and statistical models.
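
spaCy's word vectors let you compare words or documents by similarity: its `Doc`, `Span`, and `Token` objects expose a `.similarity()` method when a pipeline with vectors is loaded, and by default that comparison is cosine similarity. The sketch below shows the underlying computation on made-up three-dimensional vectors. The `TOY_VECTORS` values are hypothetical; real vectors come from a trained pipeline such as `en_core_web_md` and typically have around 300 dimensions.

```python
import math

# Hypothetical 3-d vectors for illustration only; real models use far more dimensions.
TOY_VECTORS = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between u and v: 1.0 means same direction, 0.0 orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # avoid division by zero for all-zero vectors
    return dot / (norm_u * norm_v)

print(cosine_similarity(TOY_VECTORS["king"], TOY_VECTORS["queen"]))
print(cosine_similarity(TOY_VECTORS["king"], TOY_VECTORS["apple"]))
```

With these toy values, "king" scores much closer to "queen" than to "apple", which is the kind of signal spaCy's similarity scores provide at scale.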

TextBlob

Although TextBlob is not as full-featured as the larger toolkits, it is an ideal way to gain first-hand NLP experience. Its simple API lets developers experiment with different NLP applications, such as noun phrase extraction and sentiment analysis.
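
TextBlob's default sentiment analyzer is lexicon-based: it looks words up in a sentiment lexicon and reports a polarity between -1.0 (negative) and 1.0 (positive). In TextBlob itself you would read `TextBlob(text).sentiment.polarity`. The following is a simplified, library-free sketch of the lexicon idea, using a hypothetical five-word lexicon and naive negation handling; it does not reproduce TextBlob's actual scores, which come from its bundled lexicon.

```python
import re

# Hypothetical mini-lexicon: word -> polarity score in [-1.0, 1.0].
LEXICON = {"good": 0.7, "great": 0.8, "love": 0.5, "bad": -0.7, "terrible": -1.0}
NEGATORS = {"not", "never", "no"}

def polarity(text: str) -> float:
    """Average the lexicon scores of sentiment words, flipping a score that follows a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    scores = []
    for i, tok in enumerate(tokens):
        if tok in LEXICON:
            score = LEXICON[tok]
            if i > 0 and tokens[i - 1] in NEGATORS:
                score = -score  # naive negation: "not good" counts as negative
            scores.append(score)
    return sum(scores) / len(scores) if scores else 0.0  # 0.0 means no sentiment words found

print(polarity("I love this, it is great"))
print(polarity("This is not good"))  # → -0.7
```

The negation rule only looks one word back, which is one reason production analyzers use richer lexicons and rules.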

Gensim

Along with NLTK, Gensim is among the most frequently used NLP libraries. It was originally designed for topic modeling, but its flexibility has made it adaptable to other NLP challenges. It can also handle inputs too large to fit in RAM, thanks to memory-efficient implementations of algorithms such as LSA (latent semantic analysis) and LDA. Despite this power, Gensim's interface remains easy to use for tasks such as text similarity retrieval and document vectorization.
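
Gensim's ability to work with corpora larger than RAM comes from streaming: it consumes documents one at a time from an iterable instead of loading everything into memory, and represents each document as a sparse bag of words (`gensim.corpora.Dictionary.doc2bow` returns a list of `(token_id, count)` pairs). The sketch below imitates that pattern with the standard library. `stream_documents` and `SimpleDictionary` are hypothetical helpers, and an in-memory `io.StringIO` stands in for a large file on disk.

```python
import io
from collections import Counter
from typing import Iterable, Iterator

def stream_documents(lines: Iterable[str]) -> Iterator[list[str]]:
    """Yield one tokenized document per line, never holding the whole corpus in memory."""
    for line in lines:
        tokens = line.lower().split()
        if tokens:
            yield tokens

class SimpleDictionary:
    """Hypothetical stand-in for a token -> integer id mapping."""

    def __init__(self) -> None:
        self.token2id: dict[str, int] = {}

    def add_documents(self, docs: Iterable[list[str]]) -> None:
        for doc in docs:
            for tok in doc:
                # New tokens get the next free id; known tokens keep their id.
                self.token2id.setdefault(tok, len(self.token2id))

    def doc2bow(self, doc: list[str]) -> list[tuple[int, int]]:
        """Sparse bag of words: sorted (token_id, count) pairs for known tokens."""
        counts = Counter(self.token2id[t] for t in doc if t in self.token2id)
        return sorted(counts.items())

corpus_file = io.StringIO("human computer interaction\ncomputer graphics and computer vision\n")
dictionary = SimpleDictionary()
dictionary.add_documents(stream_documents(corpus_file))  # first pass: build the vocabulary
corpus_file.seek(0)                                      # rewind to stream the corpus again
for doc in stream_documents(corpus_file):
    print(dictionary.doc2bow(doc))
# → [(0, 1), (1, 1), (2, 1)]
# → [(1, 2), (3, 1), (4, 1), (5, 1)]
```

Because only one line is tokenized at a time, memory use depends on the vocabulary size rather than the corpus size, which is the property the paragraph above describes.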

CoreNLP

Developed at Stanford University, CoreNLP is a fast, Java-based NLP library. It offers a broad range of text property extraction capabilities, such as named-entity recognition, and is praised for its simplicity and adaptability. It supports multiple languages and includes essential NLP tools, although its interface may feel dated to users accustomed to modern design.
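
Because CoreNLP runs on the JVM, a common way to use it from Python is to start the CoreNLP server and send it text over HTTP. The sketch below assumes a server is already running locally on its default port, 9000, and uses only the standard library. The helper names are our own, and the annotator list is an example, so check the CoreNLP server documentation for the options your version supports.

```python
import json
import urllib.parse
import urllib.request

def build_corenlp_request(
    text: str,
    annotators: tuple[str, ...] = ("tokenize", "ssplit", "pos", "lemma", "ner"),
    server_url: str = "http://localhost:9000/",  # assumed local server on the default port
) -> urllib.request.Request:
    """Build a POST request asking a CoreNLP server to annotate `text` and reply in JSON."""
    properties = {"annotators": ",".join(annotators), "outputFormat": "json"}
    query = urllib.parse.urlencode({"properties": json.dumps(properties)})
    return urllib.request.Request(
        f"{server_url}?{query}", data=text.encode("utf-8"), method="POST"
    )

def annotate(text: str) -> dict:
    """Send the request and parse the JSON annotations (requires a running server)."""
    with urllib.request.urlopen(build_corenlp_request(text), timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))

# Usage, with a server running:
# for sentence in annotate("Stanford University is in California.")["sentences"]:
#     print([(tok["word"], tok["ner"]) for tok in sentence["tokens"]])
```

Separating request construction from the network call keeps the request logic easy to inspect and test even when no server is available.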

Pattern

Pattern is a full-featured NLP library that provides a wide range of functions, including sentiment analysis, SVM classification, clustering, WordNet access, POS tagging, DOM parsing, and web crawling. Its versatility covers not only data mining and visualization but also text analysis. Notable features include separating facts from opinions and distinguishing comparatives from superlatives.
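
Pattern pairs its NLP functions with web-mining tools such as crawlers and DOM parsers, because text scraped from the web must be stripped of markup before it can be analyzed. As a rough, library-free illustration of that step (not Pattern's own API), the sketch below uses Python's built-in `html.parser` to collect visible text while skipping `<script>` and `<style>` content.

```python
from html.parser import HTMLParser

class VisibleTextExtractor(HTMLParser):
    """Collect text nodes from HTML, ignoring the contents of <script> and <style>."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0          # > 0 while inside a skipped element
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())

def html_to_text(html: str) -> str:
    """Return the visible text of an HTML string, one space between text nodes."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)

page = ("<html><head><style>p {color: red}</style></head><body>"
        "<h1>Review</h1><p>The phone is great.</p><script>track();</script></body></html>")
print(html_to_text(page))  # → Review The phone is great.
```

The resulting plain text can then be passed to sentiment analysis or any other step described above.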

polyglot

Polyglot stands out for its multilingual reach: some of its features, such as language detection, cover close to 200 languages, making it one of the most multilingual NLP libraries available. It uses NumPy for fast, efficient operation. Its community is still smaller than NLTK's, but it has significant potential for long-term growth.
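
Multilingual pipelines such as polyglot usually begin by detecting which language a text is written in. The toy sketch below shows one very simple approach: score each candidate language by how many of its common function words appear in the text. The three tiny word lists and the `guess_language` function are hypothetical and far too small for real use; polyglot relies on a trained language detector instead.

```python
import re
from typing import Optional

# Hypothetical, tiny function-word lists; practical detectors use statistical models.
FUNCTION_WORDS = {
    "en": {"the", "is", "and", "of", "on", "a"},
    "fr": {"le", "la", "est", "et", "de", "sur", "un"},
    "es": {"el", "la", "es", "y", "de", "en", "un"},
}

def guess_language(text: str) -> Optional[str]:
    """Return the language whose function words occur most often, or None if none occur."""
    tokens = re.findall(r"\w+", text.lower())
    scores = {lang: sum(tok in words for tok in tokens) for lang, words in FUNCTION_WORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None

print(guess_language("The cat is on the table"))   # → en
print(guess_language("Le chat est sur la table"))  # → fr
```

Shared words such as "la" or "de" show why real detectors need far more evidence than a handful of function words.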

PyNLPl

PyNLPl, whose name is pronounced “pineapple”, is a modular NLP library. It consists of custom-built Python modules for NLP tasks, including a library for working with FoLiA XML. This makes PyNLPl an interesting option for developers who want to handle their NLP workflows flexibly.
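
FoLiA is an XML format for linguistically annotated text. To give a feel for what such a document contains, the sketch below reads word tokens from a heavily simplified FoLiA-style snippet using the standard `xml.etree.ElementTree` module rather than PyNLPl itself. The snippet is hand-written and not validated against the FoLiA schema. The namespace URI is the one FoLiA documents declare, but treat the element details here as assumptions.

```python
import xml.etree.ElementTree as ET

FOLIA_NS = {"folia": "http://ilk.uvt.nl/folia"}
XML_ID = "{http://www.w3.org/XML/1998/namespace}id"  # how ElementTree names xml:id

# Hand-written, simplified FoLiA-style document (assumption: not schema-validated).
DOCUMENT = """<FoLiA xmlns="http://ilk.uvt.nl/folia" xml:id="example">
  <text xml:id="example.text">
    <s xml:id="example.s.1">
      <w xml:id="example.s.1.w.1"><t>Hello</t></w>
      <w xml:id="example.s.1.w.2"><t>world</t></w>
    </s>
  </text>
</FoLiA>"""

def read_words(xml_text: str) -> list[tuple[str, str]]:
    """Return (xml:id, text) pairs for every <w> word element, in document order."""
    root = ET.fromstring(xml_text)
    words = []
    for w in root.iterfind(".//folia:w", FOLIA_NS):
        t = w.find("folia:t", FOLIA_NS)  # the word's text content element
        words.append((w.get(XML_ID), t.text if t is not None else ""))
    return words

print(read_words(DOCUMENT))  # → [('example.s.1.w.1', 'Hello'), ('example.s.1.w.2', 'world')]
```

Real FoLiA documents carry much richer annotation layers, which is why a dedicated library is preferable to hand-written parsing.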

scikit-learn

scikit-learn originated as a SciPy toolkit (a “scikit”) and is now widely used, including by companies such as Spotify. It offers machine learning algorithms and utilities for tasks such as text classification, supervised learning, and sentiment analysis. Although it lacks extensive deep learning features, scikit-learn is a robust and reliable tool.
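
A typical scikit-learn text-classification pipeline turns documents into word counts (for example with `CountVectorizer`) and fits a classifier such as `MultinomialNB`. To show what that classifier computes, here is a from-scratch multinomial Naive Bayes sketch with add-one (Laplace) smoothing. The function names and the four-sentence training set are hypothetical, and in practice you would use scikit-learn's tested implementation.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def train_naive_bayes(docs, labels):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        tokens = tokenize(doc)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab

def predict(model, doc):
    """Pick the class maximizing log P(class) + sum of log P(word | class)."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)  # add-one smoothing
        for tok in tokenize(doc):
            if tok in vocab:  # ignore words never seen in training
                score += math.log((word_counts[label][tok] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

docs = ["I loved this movie", "great acting and a great plot",
        "I hated this movie", "boring plot and bad acting"]
labels = ["pos", "pos", "neg", "neg"]
model = train_naive_bayes(docs, labels)
print(predict(model, "a great movie"))   # → pos
print(predict(model, "bad and boring"))  # → neg
```

Working in log space avoids numeric underflow when many small probabilities are multiplied, which is the same reason library implementations do it.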

PyTorch

Developed by Facebook AI Research, PyTorch offers many powerful features for deep learning, although it comes with a steeper learning curve and assumes familiarity with NLP concepts. Its speed makes it a strong choice for highly complex data such as text and images, and it is a popular framework for deep learning projects.
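
At its core, PyTorch automates the loop every neural network is trained with: a forward pass, a loss, gradients computed by automatic differentiation (`loss.backward()`), and a parameter update (`optimizer.step()`). The sketch below writes that loop out by hand for the simplest possible model, a single-neuron logistic-regression classifier over bag-of-words features, so each step is visible. It is a toy illustration under our own assumptions (hypothetical data, hand-derived gradients), not PyTorch code.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(features, labels, lr=0.5, epochs=200):
    """Fit weights w and bias b by gradient descent on the mean binary cross-entropy loss."""
    n_features = len(features[0])
    w = [0.0] * n_features
    b = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)  # forward pass
            error = p - y  # d(loss)/d(logit) for sigmoid + cross-entropy
            for j, xj in enumerate(x):
                grad_w[j] += error * xj
            grad_b += error
        w = [wi - lr * gw / n for wi, gw in zip(w, grad_w)]  # parameter update
        b -= lr * grad_b / n
    return w, b

# Hypothetical bag-of-words features: counts of ["great", "boring"] in each review.
X = [[2, 0], [1, 0], [0, 1], [0, 2]]
y = [1, 1, 0, 0]
w, b = train_logistic_regression(X, y)
print(sigmoid(w[0] + b))  # probability that a review containing "great" once is positive
```

In PyTorch, autograd derives `error * x` for you, which is what makes deep, many-layer models practical to train.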

Comparison of Top Python Libraries for Natural Language Processing

The table below compares the top Python NLP libraries by speed, ease of use, ideal use cases, and what each is best for.

| Library | Speed | Ease of Use | Ideal Use Cases | Best For |
|---|---|---|---|---|
| NLTK | Moderate | Beginner-friendly | Educational, broad NLP projects | Comprehensive NLP for beginners |
| spaCy | Fast | User-friendly | Large-scale NLP, production apps | High-performance NLP tasks |
| TextBlob | Moderate | Beginner-friendly | Simple NLP tasks, prototyping | Quick sentiment analysis, simple tasks |
| Gensim | Fast (for large datasets) | Moderate | Topic modeling, document similarity | Large datasets, topic modeling |
| CoreNLP | Fast (Java-based) | Complex for beginners | Text property extraction, entity recognition | Fast entity recognition, multilingual support |
| Pattern | Moderate | Easy | Sentiment analysis, web scraping | Versatile NLP tasks and web scraping |
| Polyglot | Moderate | Easy | Multilingual NLP applications | Multilingual NLP (close to 200 languages) |
| PyNLPl | Moderate | Moderate | Modular NLP workflows | Custom NLP workflows |
| scikit-learn | Fast | Moderate | Machine learning tasks, text classification | ML-based NLP tasks |
| PyTorch | Fast | Steep learning curve | Deep learning NLP projects | Deep learning with NLP |

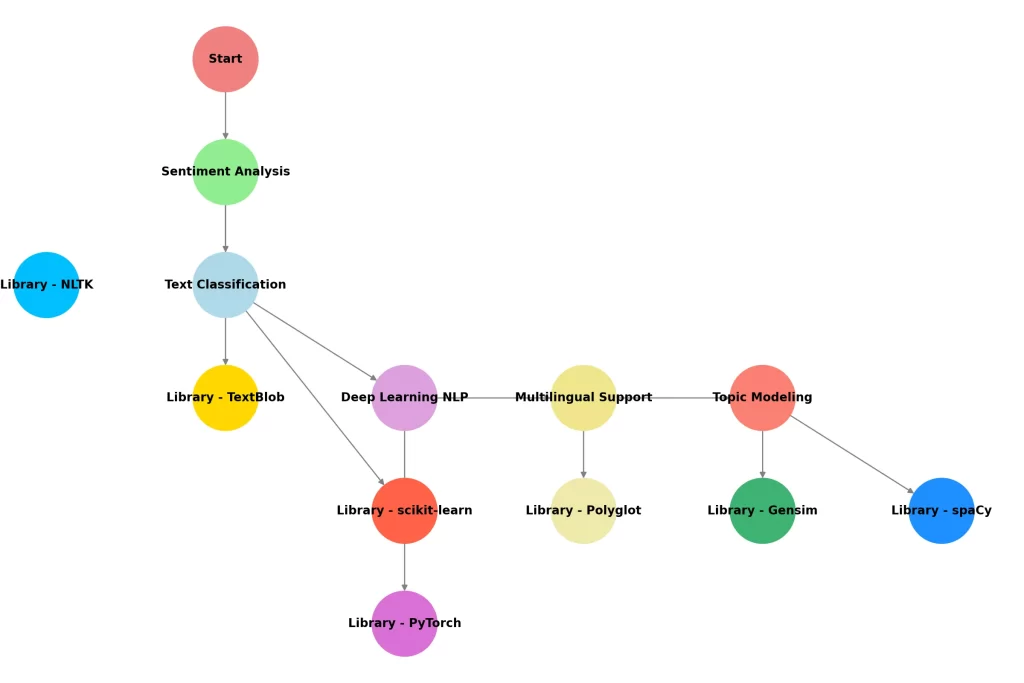

Flowchart For Choosing Python NLP Libraries

Conclusion

Python offers a large number of NLP libraries, and no single library fits every use case. NLTK and spaCy excel at the basics, while Gensim is suited to large datasets. TextBlob offers a gentle introduction, and libraries such as Pattern and scikit-learn provide broader functionality. Polyglot leads in multilingual support, and PyTorch provides the deep learning power that complex projects need. By understanding these strengths, developers can pick the most suitable library for each task and make full use of NLP to improve human-computer interaction.
