A convolutional neural network (CNN), also referred to as a ConvNet, is a type of neural network designed to process data arranged in a grid, such as images. The pixels of a digital image are laid out in a grid, and each pixel holds values for brightness and colour.

The human visual system offers a useful analogy. Each neuron in the visual cortex responds only to stimuli within a limited region of the visual field, its receptive field (RF), and the receptive fields of many neurons overlap to cover the entire visual area. In the same way, each neuron in a CNN processes only the data within its own receptive field. Because of this layered architecture, the network detects increasingly sophisticated patterns: it starts with simple features such as lines and curves and builds up to complex patterns such as faces and objects. This is why CNNs are often described as giving computers a human-like sense of vision.

Artificial neural networks deliver strong results on a wide range of machine learning problems involving image, audio and text data. Different tasks call for specialized architectures: for example, recurrent neural networks (RNNs) are typically used for sequence prediction, while CNNs are used for image classification.

What are Convolutional Neural Networks?

CNNs are a type of neural network. Neural networks are a subset of machine learning and lie at the heart of deep learning algorithms. In a neural network, each node has associated weights and a threshold. If the output of a node exceeds the defined threshold, the node is activated and sends data to the next layer of the network; if the output falls below the threshold, no data is passed along to the next layer.
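To make the threshold idea concrete, here is a minimal sketch of a single node in plain Python. It assumes a simple step-style rule: the node computes a weighted sum of its inputs and passes that sum on only if it exceeds the threshold. The function name `node_output` and all numbers are hypothetical and chosen only for illustration; networks trained with gradient descent usually use smooth activation functions instead of a hard cut-off.

```python
def node_output(inputs, weights, threshold):
    """Pass the weighted sum on if it exceeds the threshold; otherwise pass nothing (0.0)."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # The node activates only when its weighted sum is above the threshold
    return weighted_sum if weighted_sum > threshold else 0.0


weights = [0.4, 0.6, 0.9]  # hypothetical weights
print(node_output([1.0, 0.5, 0.2], weights, threshold=0.5))  # activates: sum is about 0.88
print(node_output([0.1, 0.2, 0.0], weights, threshold=0.5))  # 0.0: sum is about 0.16
```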

Recurrent neural networks are commonly used for natural language processing and speech recognition, whereas convolutional neural networks are more often used for classification and computer vision tasks. Earlier approaches to object recognition in images relied on manual, time-consuming feature extraction. CNNs offer a more flexible and scalable alternative: they use matrix multiplications to learn to recognize patterns within images directly. They excel at image classification and object recognition, but they are computationally demanding and usually require graphics processing units (GPUs) for training.


How Does a CNN Work?

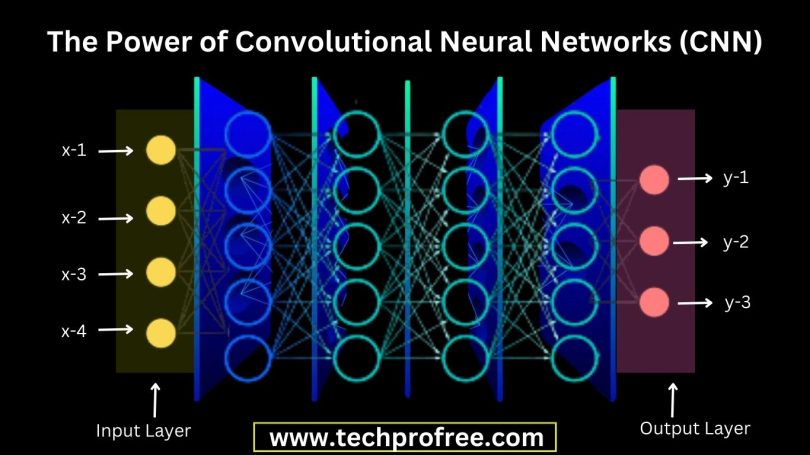

CNNs outperform other types of neural networks on image, speech and audio signal inputs. A CNN is built from three main types of layers:

- Convolutional

- Pooling

- Fully-connected

The convolutional layer is the first layer of a convolutional network. It can be followed by additional convolutional layers or by pooling layers, and the fully connected layer is the final layer. With each layer, the CNN increases in complexity and identifies larger portions of the image. Earlier layers focus on simple features such as colours and edges. As the image data passes through the layers of the CNN, the network starts to recognize larger elements or shapes of the object until it finally identifies the object itself.
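To illustrate how data moves through this sequence of layers, the sketch below traces the shape of an input through two convolution and pooling stages and into a fully connected layer. The architecture (a 28 x 28 grayscale image, 3 x 3 filters, 8 and then 16 filters, 2 x 2 max pooling, 10 output classes) is a hypothetical choice made for illustration, as are the helper names; the code computes only shapes, not actual values.

```python
def conv_shape(shape, kernel_size, num_filters, stride=1):
    """Output (height, width, depth) of an unpadded convolution."""
    height, width, _ = shape
    return (
        (height - kernel_size) // stride + 1,
        (width - kernel_size) // stride + 1,
        num_filters,  # one feature map per filter
    )


def pool_shape(shape, size=2):
    """Output shape of non-overlapping size x size pooling (depth is unchanged)."""
    height, width, depth = shape
    return (height // size, width // size, depth)


trace = [("input", (28, 28, 1))]
trace.append(("conv 3x3, 8 filters", conv_shape(trace[-1][1], 3, 8)))
trace.append(("max pool 2x2", pool_shape(trace[-1][1])))
trace.append(("conv 3x3, 16 filters", conv_shape(trace[-1][1], 3, 16)))
trace.append(("max pool 2x2", pool_shape(trace[-1][1])))
h, w, d = trace[-1][1]
trace.append(("flatten", (h * w * d,)))
trace.append(("fully connected, 10 classes", (10,)))

for name, shape in trace:
    print(f"{name:28} {shape}")
```

In this example the spatial size shrinks from 28 x 28 to 5 x 5 while the depth grows from 1 to 16, before the 400 flattened values reach the fully connected layer.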

- Convolutional Layer:

The convolutional layer is the core building block of a CNN, and it is where most of the computation takes place. Suppose the input is a colour image made up of a three-dimensional (3-D) matrix of pixels: the input has a height, a width and a depth, where the depth corresponds to the image's three RGB colour channels. The layer also has a feature detector, also known as a kernel or filter, which moves across the receptive fields of the image and checks whether the feature it detects is present. This process is called a convolution.

The feature detector is a two-dimensional (2-D) array of weights that represents part of the image; for a multi-channel input such as an RGB image, the filter has the same depth as the input, with one 2-D slice per channel. Filters can vary in size, but a 3 x 3 matrix is typical, and the filter size also determines the size of the receptive field. The filter is applied to one area of the image, and a dot product is computed between the input pixels in that area and the filter. The result of this dot product is written into an output array. The filter then shifts by a stride and repeats the process until it has swept across the entire image. The final output of this series of dot products between the input and the filter is called a feature map, activation map or convolved feature.
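The sliding dot product described above can be sketched in a few lines of plain Python. This minimal version assumes a single-channel (grayscale) image stored as a list of lists, a square filter, no padding and a configurable stride; the function name `convolve2d` and the example values are hypothetical. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation, which is what CNN layers conventionally call a convolution.

```python
def convolve2d(image, kernel, stride=1):
    """Slide `kernel` over `image` and return the feature map (no padding)."""
    img_h, img_w = len(image), len(image[0])
    k = len(kernel)  # assumes a square k x k filter
    out_h = (img_h - k) // stride + 1
    out_w = (img_w - k) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Dot product between the filter and the image patch it currently covers
            value = sum(
                image[top + di][left + dj] * kernel[di][dj]
                for di in range(k)
                for dj in range(k)
            )
            row.append(value)
        feature_map.append(row)
    return feature_map


# Hypothetical 5 x 5 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# A 3 x 3 vertical-edge detector
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
for row in convolve2d(image, kernel):
    print(row)  # [3, 3, 0] on every row
```

The filter responds strongly (3) wherever its window straddles the edge and gives 0 over the uniform region on the right. Calling `convolve2d(image, kernel, stride=2)` skips every other position and returns the smaller map `[[3, 0], [3, 0]]`.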

The weights in the feature detector remain the same at every position as it slides across the image, which is known as parameter sharing. The weight values themselves are learned during training through backpropagation and gradient descent. However, three hyperparameters that affect the volume size of the output must be set before training of the neural network begins:

- Number of filters: the depth of the output depends on the number of filters used. For example, three distinct filters produce three different feature maps, giving an output depth of three.

- Stride: the distance, or number of pixels, that the kernel moves over the input matrix at each step. Stride values of two or greater are rare, but a larger stride yields a smaller output.
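The effect of these two hyperparameters on the output volume can be checked with the standard output-size formula for an unpadded convolution, (input size - filter size) / stride + 1 rounded down, with the output depth equal to the number of filters. The function below is a hypothetical helper written for illustration and assumes square filters and no padding.

```python
def conv_output_shape(height, width, kernel_size, stride, num_filters):
    """Return (height, width, depth) of a conv layer's output, assuming no padding."""
    out_h = (height - kernel_size) // stride + 1
    out_w = (width - kernel_size) // stride + 1
    # Each filter produces one feature map, so depth equals the number of filters
    return out_h, out_w, num_filters


print(conv_output_shape(7, 7, 3, stride=1, num_filters=3))  # (5, 5, 3)
print(conv_output_shape(7, 7, 3, stride=2, num_filters=3))  # (3, 3, 3)
```

Here, raising the stride from one to two shrinks each spatial dimension from 5 to 3, while changing the number of filters would only change the depth.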

Zero-Padding:

Zero-padding is typically used when the filters do not fit the input image. All elements that fall outside the input matrix are set to zero, producing an output that is larger than or equal in size to the input. There are three types of padding:

Valid padding: Also known as no padding. In this case, the last convolution is dropped if the dimensions do not align.

Same padding: This padding ensures that, with a stride of one, the output layer has the same size as the input layer.

Full padding: This padding adds zeros to the border of the input, increasing the size of the output.
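A small sketch can make the three padding modes concrete. It assumes a stride of one and an odd, square filter of size k; under those assumptions, valid padding adds no zeros, same padding adds (k - 1) / 2 zeros on each side, and full padding adds k - 1 zeros on each side. The helper names `zero_pad` and `padding_amount` are hypothetical.

```python
def zero_pad(image, pad):
    """Surround a 2-D image (list of lists) with `pad` rows and columns of zeros."""
    width = len(image[0]) + 2 * pad
    padded = [[0] * width for _ in range(pad)]            # top border
    for row in image:
        padded.append([0] * pad + list(row) + [0] * pad)  # left and right borders
    padded.extend([0] * width for _ in range(pad))        # bottom border
    return padded


def padding_amount(mode, kernel_size):
    """Zeros added on each side (assumes stride 1 and an odd kernel size)."""
    if mode == "valid":
        return 0
    if mode == "same":
        return (kernel_size - 1) // 2
    if mode == "full":
        return kernel_size - 1
    raise ValueError(f"unknown padding mode: {mode}")


image = [[1] * 5 for _ in range(5)]  # hypothetical 5 x 5 input
kernel_size = 3
for mode in ("valid", "same", "full"):
    padded = zero_pad(image, padding_amount(mode, kernel_size))
    # Width of the output of a stride-1 convolution over the padded input
    print(mode, len(padded[0]) - kernel_size + 1)  # valid 3, same 5, full 7
```

With a 3 x 3 filter, the 5-pixel-wide input shrinks to 3 under valid padding, stays at 5 under same padding and grows to 7 under full padding.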

Another convolutional layer can follow the first one, which makes the structure of the CNN hierarchical: later layers see the pixels within the receptive fields of earlier layers. For example, to recognize a bicycle in an image, the network identifies its individual parts, such as the frame, handlebars, wheels and pedals. Each part is a lower-level pattern, and the combination of parts forms a higher-level pattern, creating a feature hierarchy within the CNN.
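One way to see why stacking layers builds a hierarchy is to track how much of the original image a single unit can "see". For stacked convolutions with stride one and no pooling in between (both assumptions of this sketch), each extra k x k layer widens the receptive field by k - 1 pixels. The function `receptive_field` is a hypothetical helper.

```python
def receptive_field(num_layers, kernel_size=3):
    """Width (in input pixels) seen by one unit after stacked stride-1 convolutions."""
    rf = 1  # a single input pixel
    for _ in range(num_layers):
        rf += kernel_size - 1  # each layer adds (k - 1) pixels of context
    return rf


for layers in (1, 2, 3):
    print(layers, receptive_field(layers))  # receptive fields of 3, 5 and 7 pixels
```

A unit in the third 3 x 3 layer therefore responds to a 7 x 7 patch of the input, so it can combine the simpler features detected by earlier layers over a wider area.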

- Pooling Layers:

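Pooling layers, also known as downsampling layers, reduce the dimensionality of the input and therefore the number of parameters and computations in later layers. Like the convolutional layer, a pooling operation sweeps a filter across the entire input, but this filter has no weights; instead, it applies an aggregation function to the values in its receptive field. Max pooling selects the pixel with the maximum value and sends it to the output array; it is the more commonly used approach. Average pooling instead sends the mean of the values in the receptive field. Although the pooling layer discards some information, it benefits the CNN by reducing complexity, improving efficiency and limiting the risk of overfitting.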
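Both pooling variants can be sketched with the same sliding-window loop used for convolution, except that no weights are involved: the window simply reports the maximum or the mean of the values it covers. This minimal version assumes non-overlapping 2 x 2 windows (window size and stride both 2) on a single-channel input; `pool2d` and the example feature map are hypothetical.

```python
def _mean(values):
    return sum(values) / len(values)


def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Downsample a 2-D feature map with max or average pooling (no learned weights)."""
    if mode == "max":
        aggregate = max
    elif mode == "average":
        aggregate = _mean
    else:
        raise ValueError(f"unknown pooling mode: {mode}")
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Collect the values covered by the window at this position
            window = [
                feature_map[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(aggregate(window))
        pooled.append(row)
    return pooled


fm = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 8, 4],
]
print(pool2d(fm, mode="max"))      # [[6, 2], [2, 8]]
print(pool2d(fm, mode="average"))  # [[3.5, 1.0], [1.0, 6.0]]
```

Either way, the 4 x 4 map is reduced to 2 x 2, a quarter of the original number of values.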

- Fully Connected Layer:

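In partially connected layers such as the convolutional and pooling layers, the pixel values of the input image are not directly connected to the output layer. In the fully connected layer, by contrast, each node in the output layer connects directly to every node in the previous layer. This layer performs the classification based on the features extracted by the previous layers and their filters.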
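A fully connected layer can be sketched as flattening the pooled feature maps into a single vector and computing, for every output node, a weighted sum over all of the inputs plus a bias. The function names, weights and biases below are hypothetical values chosen for illustration; in a trained network the weights would be learned through backpropagation.

```python
def flatten(feature_maps):
    """Turn a list of 2-D feature maps into one flat list of values."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(inputs, weights, biases):
    """Fully connected layer: every output node takes a weighted sum over all inputs."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        if len(node_weights) != len(inputs):
            raise ValueError("each node needs exactly one weight per input")
        outputs.append(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
    return outputs


# Two hypothetical 2 x 2 feature maps, flattened into 8 input values
maps = [[[6, 2], [2, 8]], [[1, 0], [0, 1]]]
x = flatten(maps)
# Hypothetical weights: one row of 8 weights per output node (2 nodes here)
weights = [
    [0.5, 0.0, 0.0, 0.5, 1.0, 0.0, 0.0, 1.0],
    [0.0, 0.25, 0.25, 0.0, 0.0, 1.0, 1.0, 0.0],
]
biases = [0.0, 1.0]
print(dense(x, weights, biases))  # [9.0, 2.0]
```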

Fully connected layers often use a softmax activation function in the output layer. Softmax converts the raw scores into probabilities between 0 and 1 that sum to 1, so that each input can be assigned to the most likely class.
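Softmax exponentiates each score and divides by the sum of the exponentials, so every output lies between 0 and 1 and the outputs sum to 1. This plain-Python sketch subtracts the largest score before exponentiating, a common trick for numerical stability that does not change the result; the class scores and labels are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three classes, e.g. "cat", "dog", "bicycle"
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(max(range(len(probs)), key=probs.__getitem__))  # index of the predicted class: 0
```

The class with the highest raw score also receives the highest probability, so the predicted class is the index of the largest softmax output.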

Types of Convolutional Neural Networks:

The foundations of CNNs lie in the research of Kunihiko Fukushima and Yann LeCun, in 1980 and 1989 respectively. Most notably, LeCun successfully applied backpropagation to train neural networks to recognize patterns in handwritten zip codes, as documented in “Backpropagation Applied to Handwritten Zip Code Recognition.” He continued this research with his team throughout the 1990s, culminating in LeNet-5, which applied the same principles to document recognition.

Since then, numerous CNN architectures have emerged alongside new datasets such as MNIST and CIFAR-10 and competitions such as the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). Notable architectures include:

- AlexNet

- VGGNet

- GoogLeNet

- ResNet

- ZFNet

- LeNet-5 (the original, classic CNN architecture)

Conclusion:

Convolutional neural networks (CNNs) have transformed computer vision, delivering outstanding performance on tasks such as image classification, object detection and image segmentation. Their distinctive architecture, inspired by the human visual system, allows them to detect complex patterns and extract meaningful features from images with high accuracy. CNNs are likely to play an even larger role in the future of artificial intelligence.
